AWS S3 extension configuration

Digital asset management (DAM) · Unbundled: Extension · License: Special license[1]

This page explains how to configure the connection between the External DAM module and your AWS S3 external asset management solution.

Essentially, you:

- Set up IAM policy permissions.
- Provide connection credentials for S3.
- Enable the subapp to display in the Magnolia Assets app.
- Configure the extension.

AWS IAM Policy

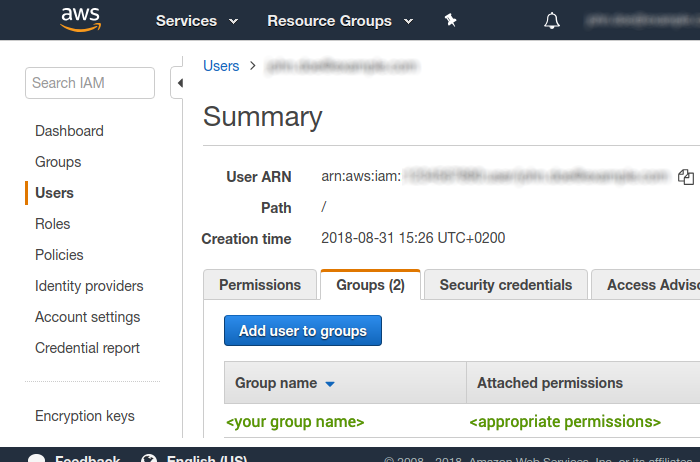

Make sure that you have acquired appropriate permissions for the service in the AWS IAM Management Console.

Licensing

The associated framework is included with your DX Core license and is free of charge. However, this extension is paid and requires an additional special license.

Minimum required permissions

To use Magnolia with AWS S3, the following permissions are required.

Based on your project requirements, you need to decide which specific S3 buckets these permissions apply to and specify them in your policy’s Resource section.

The example below shows permissions granted to all buckets; you should implement a more restrictive policy.

- s3:ListBucket
- s3:GetBucketLocation
- s3:ListAllMyBuckets
- s3:GetObject
- s3:PutObject
- s3:DeleteObject
- s3:PutObjectAcl
- s3:GetObjectAcl
- s3:HeadObject
- s3:CopyObject
- s3:CreateBucket

For more information about these permissions, see the Actions defined by Amazon S3 page in the Amazon documentation.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowS3Operations",
          "Effect": "Allow",
          "Action": [
            "s3:ListBucket",
            "s3:GetBucketLocation",
            "s3:ListAllMyBuckets",
            "s3:GetObject",
            "s3:PutObject",
            "s3:DeleteObject",
            "s3:PutObjectAcl",
            "s3:GetObjectAcl",
            "s3:HeadObject",
            "s3:CopyObject",
            "s3:CreateBucket"
          ],
          "Resource": [
            "arn:aws:s3:::*",
            "arn:aws:s3:::*/*"
          ]
        }
      ]
    }

| Key | Description |
|---|---|
| Action | Grant access for the list of bucket and object-level permissions. |
| Resource | List the AWS S3 buckets to which you want these permissions to apply. In this example, the permissions apply to all buckets; you should implement a more restrictive policy based on your specific requirements. |

Buckets created via the external DAM connector don’t have ACLs and have public access blocked; you must adjust these settings to enable public access.
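The advice to replace the all-buckets Resource section with a more restrictive one can be sketched as follows. This is a minimal Python sketch, not part of Magnolia or the AWS SDK: the helper name build_bucket_policy and the bucket name my-dam-assets are hypothetical. It only narrows the Resource section and reuses the action list from this page unchanged. Some actions, such as s3:ListAllMyBuckets, are not tied to a single bucket, so you may need a separate statement for them; check the AWS documentation before using the result.

```python
import json

# Actions copied from the policy example on this page.
ACTIONS = [
    "s3:ListBucket",
    "s3:GetBucketLocation",
    "s3:ListAllMyBuckets",
    "s3:GetObject",
    "s3:PutObject",
    "s3:DeleteObject",
    "s3:PutObjectAcl",
    "s3:GetObjectAcl",
    "s3:HeadObject",
    "s3:CopyObject",
    "s3:CreateBucket",
]


def build_bucket_policy(bucket_names):
    """Return a policy dict whose Resource section lists only the given buckets.

    Hypothetical helper for illustration; not a Magnolia or AWS API.
    """
    resources = []
    for name in bucket_names:
        resources.append(f"arn:aws:s3:::{name}")    # the bucket itself
        resources.append(f"arn:aws:s3:::{name}/*")  # objects inside the bucket
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowS3Operations",
                "Effect": "Allow",
                "Action": ACTIONS,
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    # "my-dam-assets" is a placeholder bucket name.
    print(json.dumps(build_bucket_policy(["my-dam-assets"]), indent=2))
```

Generating the two ARNs per bucket keeps bucket-level and object-level resources in step, which is easy to get wrong when editing the JSON by hand.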

Configuring the AWS connection

The magnolia-aws-foundation module handles all Amazon connections from Magnolia.

It’s installed automatically by Maven when you install any AWS-dependent module.

To use AWS in Magnolia, you must have a working AWS account.

You need AWS credentials to connect Magnolia to AWS. Credentials consist of:

- AWS access key ID
- AWS secret access key
- Optionally, a session token (when using the AWS default credential provider chain)

Generate the key in the security credentials section of the Amazon IAM Management Console. In the navigation bar on the upper right, choose your user name, and then choose My Security Credentials.

You can store your AWS credentials using:

- Magnolia Passwords app (session tokens aren’t supported in the app)
- AWS default credential provider chain

Using the Passwords app

Add your generated access key ID and the secret access key to your Magnolia instance in the Passwords app using the following names and order:

📁 (folder and entry names not preserved in this copy)

Using the AWS default credential provider chain

The AWS SDK uses a chain of sources to look for credentials in a specific order. For more information, see Default credentials provider chain.

- Set your AWS credentials by following the instructions in the AWS documentation: Provide temporary credentials to the SDK.

  For a more secure implementation using the default credential provider chain, we recommend using a session token, which expires, rather than a permanent user token.

- Disable Magnolia’s internal credential handling by doing one of the following:

  - Adding the following configuration properties to your WEB-INF/config/default/magnolia.properties file:

        magnolia.aws.validateCredentials=false
        magnolia.aws.useCredentials=false

  - Using JVM arguments as shown in the next step.

- Set your AWS session or user token. AWS credentials can be injected using environment variables or JVM system properties. For more details, see Default credentials provider chain and Configure access to temporary credentials.

Example configuration with a session token and JVM arguments:

    -Dmagnolia.aws.validateCredentials=false
    -Dmagnolia.aws.useCredentials=false
    -Daws.accessKeyId=$AWS_ACCESS_KEY_ID
    -Daws.secretAccessKey=$AWS_SECRET_ACCESS_KEY
    -Daws.sessionToken=$AWS_SESSION_TOKEN

- The two magnolia.aws properties disable Magnolia’s internal credential handling using JVM properties.
- The three aws properties are JVM properties that inject environment variables containing the AWS credentials. Ensure that your environment variables AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_SESSION_TOKEN are set.
- AWS_SESSION_TOKEN is optional.

Example configuration with a permanent user token:

    -Dmagnolia.aws.validateCredentials=false
    -Dmagnolia.aws.useCredentials=false
    -Daws.accessKeyId=<your-access-key-id>
    -Daws.secretAccessKey=<your-secret-access-key>
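If you assemble the JVM arguments in a launch script, the rule that the access key ID and secret access key are required while the session token is optional can be sketched as below. This is an illustrative Python sketch only: the function name build_jvm_args is hypothetical, and how you pass the resulting arguments to your servlet container depends on your setup.

```python
def build_jvm_args(env):
    """Build the -D arguments from a mapping of environment variables.

    Hypothetical helper for illustration; `env` is any dict-like object,
    for example os.environ.
    """
    # Disable Magnolia's internal credential handling.
    args = [
        "-Dmagnolia.aws.validateCredentials=false",
        "-Dmagnolia.aws.useCredentials=false",
    ]
    # The access key ID and the secret access key are required.
    required = (
        ("AWS_ACCESS_KEY_ID", "aws.accessKeyId"),
        ("AWS_SECRET_ACCESS_KEY", "aws.secretAccessKey"),
    )
    for variable, jvm_property in required:
        value = env.get(variable)
        if not value:
            raise ValueError(f"{variable} is not set")
        args.append(f"-D{jvm_property}={value}")
    # The session token is optional, so it is added only when present.
    token = env.get("AWS_SESSION_TOKEN")
    if token:
        args.append(f"-Daws.sessionToken={token}")
    return args


if __name__ == "__main__":
    # Placeholder values; real credentials should never be printed or logged.
    demo_env = {
        "AWS_ACCESS_KEY_ID": "<your-access-key-id>",
        "AWS_SECRET_ACCESS_KEY": "<your-secret-access-key>",
    }
    print(" ".join(build_jvm_args(demo_env)))
```

Failing early on a missing required variable avoids starting Magnolia with an empty credential value.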

Enabling your subapp

By default, the AWS S3 subapp is disabled.

To enable it, decorate the Magnolia Assets app under <dam-connector>/decorations/dam and set the enabled property to true.

    providers:
      s3Provider:
        identifier: s3
        enabled: true
        class: info.magnolia.external.dam.s3.datasource.S3AssetProviderConfiguration

You can create or edit the configuration in the JCR or the File System (YAML) under magnolia-external-dam-s3/external-dams/<definition-name>.

Single asset provider for link fields

You can configure a link field to limit your users to selecting assets from only one asset provider.

This is possible from External DAM 1.0.7 (DAM module 3.0.8). Previously, you had to use the aggregated damLinkField and users had to choose from all the enabled asset providers.

This example shows a dialog definition with two link fields, each limited to different asset providers:

    form:
      properties:
        s3:
          $type: damLinkField
          chooserId: dam-s3:chooser
          datasource:
            class: info.magnolia.dam.app.data.AssetDatasourceDefinition
            name: s3
        jcr:
          $type: linkField
          converterClass: info.magnolia.ui.editor.converter.JcrAssetConverter
          datasource:
            $type: jcrDatasource
            workspace: website

AWS S3 bucket whitelist

By default, all the buckets in the S3 account you connect to are displayed. If you don’t want to display all your buckets, you can configure a whitelist in the Resource files app using regex.

For example:

    whitelistedBuckets:
      - "^a.*"
      - a-particular-bucket

Adding a filter capability to S3 buckets

If you want to restrict access to a single bucket, either because it serves several use cases or for confidentiality reasons, you can add a bucket prefix. A bucket prefix grants access only to that specific bucket without revealing its actual path, which might contain information you don’t want to expose. The user then sees the bucket and its folders as the only folders in the Magnolia instance.

To use the filter, add the following bucket prefix to your config.yaml file:

    whitelistedBuckets:
      - BUCKET_NAME
    bucketPrefixes:
      BUCKET_NAME: "FOLDER_NAME/SUBFOLDER_NAME"

Cache

This integration framework uses Caffeine, a high-performance Java cache library, to manage caching for external assets via a Magnolia helper module called magnolia-addon-commons-cache.

For S3 caching, you can specify the behavior of the caches. Essentially, you can configure all the parameters available in CaffeineSpec.

For example:

- Set a maximum cache size.
- Define how often the cache expires.
- Enable or disable the cache.

You configure caching through decoration under /<dam-connector>/decorations/addon-commons-cache/config.yaml.

    cacheConfigurations:
      s3:
        caches:
          s3-objects: maximumSize=500, expireAfterAccess=10m
          s3-buckets: expireAfterAccess=60m
          s3-count: maximumSize=500, expireAfterAccess=10m
          s3-pages: maximumSize=500, expireAfterAccess=10m

Cache configuration properties

| Property | Description |
|---|---|
| | required Use CaffeineSpec properties to specify the cache behavior, such as the maximum size of the cache and when cache entries expire, for each cache. For example: |
| | required, Set to |

Manually flushing the cache

If you want to flush the cache, you can either set the cache expiry to a very short time (see above) or configure a script in the Groovy app to flush the cache manually. For example:

    import info.magnolia.objectfactory.Components

    // Look up the cache manager from magnolia-addon-commons-cache.
    cacheManager = Components.getComponent(info.magnolia.addon.commons.cache.CacheManager)

    // Flush the s3-objects cache.
    cacheManager.evictCache("s3-objects")

Pagination

Magnolia retrieves pages of objects from S3 and then obtains the assets you require from those pages. The pages are cached.

You can configure the size of a page of objects using the property pageSize to improve response times.

    pageSize: 100

| Property | Description |
|---|---|
| pageSize | optional For S3, the minimum number of objects retrieved per request is 100. The maximum is 1000. |
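To get a feel for how pageSize affects the number of requests, here is a small sketch of the arithmetic. It assumes that each request returns at most pageSize objects and that values outside the 100 to 1000 range are rejected; both the function name requests_needed and the rejection behavior are assumptions for illustration, not documented Magnolia behavior.

```python
import math

# Limits stated in the pageSize description.
MIN_PAGE_SIZE = 100
MAX_PAGE_SIZE = 1000


def requests_needed(total_objects, page_size):
    """Return how many page requests are needed to list total_objects.

    Hypothetical helper: assumes each request returns at most page_size objects.
    """
    if not MIN_PAGE_SIZE <= page_size <= MAX_PAGE_SIZE:
        raise ValueError(
            f"pageSize must be between {MIN_PAGE_SIZE} and {MAX_PAGE_SIZE}"
        )
    return math.ceil(total_objects / page_size)


if __name__ == "__main__":
    for size in (100, 500, 1000):
        print(size, requests_needed(2500, size))
```

Under these assumptions, a folder with 2500 objects needs 25 requests at a page size of 100 but only 3 at 1000, at the cost of larger responses per request.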

Maximum search time for S3

You can configure the maximum time Magnolia Periscope spends searching for S3 assets to be displayed in the Magnolia interface when a user searches for an asset in the Find Bar.

    maxSearchTimeInMilis: 10000

| Property | Description |
|---|---|
| maxSearchTimeInMilis | optional The maximum time Magnolia Periscope spends searching for S3 assets. By default, the maximum search time is 10 seconds. For example: maxSearchTimeInMilis: 10000 |

Presigned URLs to enable previewing for S3 assets

When you upload assets via Magnolia to S3, they’re set to private by default. If you want to keep the preview functionality available, you must use presigned URLs.

    presignedSignatureDurationInMinutes: 60

| Property | Description |
|---|---|
| presignedSignatureDurationInMinutes | optional Determines how long a presigned URL is valid. We recommend that the duration is at least equal to the expiration of the caches set for s3-objects. For example: |
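The recommendation that the presigned URL duration is at least as long as the s3-objects cache expiry can be checked with a few lines of code. The sketch below is illustrative Python, not Magnolia code: the function names are hypothetical, and it only understands the integer-plus-suffix duration form (d, h, m, s) and the expireAfterAccess key used in the cache example on this page.

```python
import re

# Minutes per duration suffix (days, hours, minutes, seconds).
UNIT_MINUTES = {"d": 24 * 60, "h": 60, "m": 1, "s": 1 / 60}


def expiry_minutes(spec, key="expireAfterAccess"):
    """Return the duration of `key` in a CaffeineSpec-style string, in minutes.

    Returns None when the key is absent. Hypothetical helper for illustration.
    """
    for option in spec.split(","):
        name, _, value = option.strip().partition("=")
        if name.strip() != key:
            continue
        match = re.fullmatch(r"(\d+)([dhms])", value.strip())
        if match is None:
            raise ValueError(f"unsupported duration: {value!r}")
        return int(match.group(1)) * UNIT_MINUTES[match.group(2)]
    return None


def presigned_duration_ok(presigned_minutes, objects_spec):
    """True if the presigned URL outlives the s3-objects cache entry."""
    expiry = expiry_minutes(objects_spec)
    return expiry is None or presigned_minutes >= expiry


if __name__ == "__main__":
    spec = "maximumSize=500, expireAfterAccess=10m"
    print(presigned_duration_ok(60, spec))
    print(presigned_duration_ok(5, spec))
```

With the values shown on this page (60 minutes for the URL, 10 minutes for the cache), the check passes; a 5-minute URL duration would not, because a cached URL could expire while still being served.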

Default AWS region

By default, Magnolia sets the default AWS region to us-west-2.

If your organization can’t support the default for compliance reasons, you can set a different default AWS region for the S3 connector.

For example, set the defaultRegion property to "cn-northwest-1" to get buckets in the China region.

    defaultRegion: "cn-northwest-1"

AWS regions list

When creating an S3 bucket in the Assets app, only AWS-enabled regions are displayed to users.

AWS S3 bucket names are globally unique, meaning no two buckets can have the same name. If you try to create a bucket and receive an error stating the bucket already exists, it means that the bucket name is already in use, either by you or someone else.

If your users want to use a disabled region, you must enable it first in AWS as described in Managing AWS Regions in the AWS documentation.

Then you must remove the newly enabled region from the list of disabled regions in the Magnolia configuration so that it appears in the chooser.

    disabledRegions:
      - "ap-east-1"
      - "af-south-1"
      - "eu-south-1"
      - "me-south-1"
      - "cn-north-1"
      - "cn-northwest-1"
      - "us-gov-east-1"
      - "us-gov-west-1"
      - "us-iso-east-1"
      - "us-isob-east-1"

Access buckets in multiple regions

In 2.0.6 and later, you can access buckets from non-default AWS regions. Previously, buckets from other regions were not listed and could not be accessed.

The optional extraBuckets configuration property lets you specify additional buckets to display, even if they are outside the default region.

    disabledRegions:
      - "ap-east-1"
      - "af-south-1"
    extraBuckets:
      - "other-bucket"
    defaultRegion: "us-east-2"

Thread pool for S3 search operations

You can control the allocation of system resources for handling search requests in the S3 connector.

    searchThreadPoolIdleLiveTime: 30L

| Property | Description |
|---|---|
| searchThreadPoolIdleLiveTime | optional default is Sets the time (in seconds) that the thread is held idle in the pool before being terminated. This helps to optimize resource usage by reducing the number of idle threads when they’re not needed. |

Number of child objects when renaming folders

When you rename a folder, all the child objects are retrieved via API and aligned accordingly.

The maxKeysRenaming property determines the maximum number of keys returned by the API call.

The higher the value of the property, the longer the action takes to complete.

    maxKeysRenaming: 1000

| Property | Description |
|---|---|
| maxKeysRenaming | optional, default is The maximum number of keys returned by the API call retrieving child objects from S3 during folder renaming. Valid range is [100-1000] |

Asset width and height storage in metadata

When you create an asset in Magnolia, the storeWidthAndHeight property determines if the width and height of the asset are stored in the corresponding S3 item as metadata.

    storeWidthAndHeight: false

| Property | Description |
|---|---|
| storeWidthAndHeight | optional, default is Set to |

Hiding the bucket name

The hideBucket property determines if the S3 bucket name is displayed in the Assets app or not.

When set to true, the bucket name no longer appears in the app folder names and asset descriptions.

    hideBucket: false

| Property | Description |
|---|---|
| hideBucket | optional, default is Set to |